Correlated Structured Logging on Azure Functions

Introduction

If you are reading this post, chances are that you know that things can go wrong and thus logging can be very useful when a solution is in production to monitor or troubleshoot it. But, not all logs are equal. While you can have enough information available, unstructured logging can be hard to read, troubleshoot or analyse. On the other hand, well-defined structured logging should allow you to search, filter and analyse your logs for better monitoring and troubleshooting. When Azure Functions run in the background, logging is even more important. Additionally, as Azure functions are meant to be single-purpose nano-services, in many scenarios, your payload would be processed by multiple functions. Therefore, a well-defined, consistent structured logging can be crucial to be able to troubleshoot a serverless-based solution spanning across multiple functions.

In this post, I’ll show how to implement correlated structured logging on Azure Functions across multiple functions with Service Bus messages using ILogger and Application Insights.

Scenario

To build this demo, I’ll use a scenario from Farm-To-Table, a company that delivers by drones fresh produce directly from the farm to your table. They are implementing the Pub-Sub pattern to process orders. Orders are published via an http endpoint (publisher interface), then put into a topic, and finally processed by subscriber interfaces. Additionally, there are some business rules applied on the subscriber interfaces, which can have an impact on the processing. Farm-To-Table want to make sure an appropriate logging is in place, so whenever there is an issue with an order, they can troubleshoot it. They know that Application Insights is the way to go for serverless logging.

Before we start

Before we start building the solution, it’s important that you understand how Azure Functions Logging with Application Insights works. I would recommend you to read the official documentation, and will only highlight some key points below.

- You can configure Logging Level depending on the needs.

- Logging is asynchronous is aggregated, so you might loose some log events.

- You need to configure sampling according to your needs so you don’t lose important log events, but also don’t impact your app performance.

- Structured logging on Azure Function requires ILogger.

- Application Insights has a retention policy and logged data is not encrypted at rest.

Good Practices for Structured Logging

As mentioned above, structured logging should ease querying and analysing logs. In order to provide these benefits, there are some practices described below that you might want to consider when implemented structured logging.

- Use Event Ids: Associate a unique Id to a specific log event, so you can filter or analyse the occurrence of a particular event. Event Ids can also be used to create alerts. To make it more useful, keep a dictionary of your event ids, ideally in your code, but also accessible to the Ops team, e.g. in a wiki.

- Standardise your logging templates. Structured logging requires a structure template. Define the templates you can use for different events. Try to keep your structured logging as consistent as possible.

- Use a Correlation Id so different events across different components can be correlated.

- Log a Status for relevant events so you can filter or analyse events based on a status.

- Define the list (enumeration) of allowed values for your logging properties when it makes sense for consistent logging.

- Log the Message or Entity Type. When you are processing different message or entity types, you want to be able to filter by type.

- Log the relevant business information. Consider logging the minimum business information to be able to correlate log events to your business process. For instance, include the business entity id.

- Do not log sensitive data, that you don’t want operators to see. Don’t log data that requires to be encrypted at rest when your logging platform does not support it.

- Consider implementing logging checkpoints. When messages are processed in different steps, having defined logging checkpoints might help you to better correlate and troubleshoot events.

You don’t need to implement all these practices. Find the sweet spot so you provide the best value to the Ops team.

The Solution

Based on the scenario described above, the solution is composed by:

- One Http triggered Azure Function (SubmitOrder) that receives and validates the Order and then sends it to a Service Bus Queue.

- A Service Bus Queue used for temporal decoupling between the two functions. In a real scenario I would have used a Service Bus Topic with subscriptions to properly implement the Pub-Sub pattern, but let’s use a Queue for illustration purposes.

- A Service Bus Message triggered Azure Function (ProcessOrder) that processes the Order.

- Application Insights to collect all Logs and Telemetry from the Azure Functions.

The Solution Components

You can find the solution source code here. Below I will describe the different components. I hope that the code with the corresponding comments are clear and intuitive enough :)Logging Constants

Based on the practices I mentioned above, I’ve created aLoggingConstants class that contains my template and enumerations for consistent structured logging.

Submit Order

TheSubmitOrder function is triggered by an Http post and drops a message into a Service Bus message queue. Checks whether an order is valid and logs an event accordingly.

Process Order

TheProcessOrder function is triggered by a message on a Service Bus Queue. Simulates the Order processing and logs events accordingly.

Querying and Analysing the Structured Logging

Once the Azure Function is running and has started logging, you can use Analytics in Application Insights for querying your structured logs using the Azure Log Analytics Query Language (a.k.a. Kusto). Below I show some sample queries for the structure logging in the code above. I added some comments to the queries for clarification.

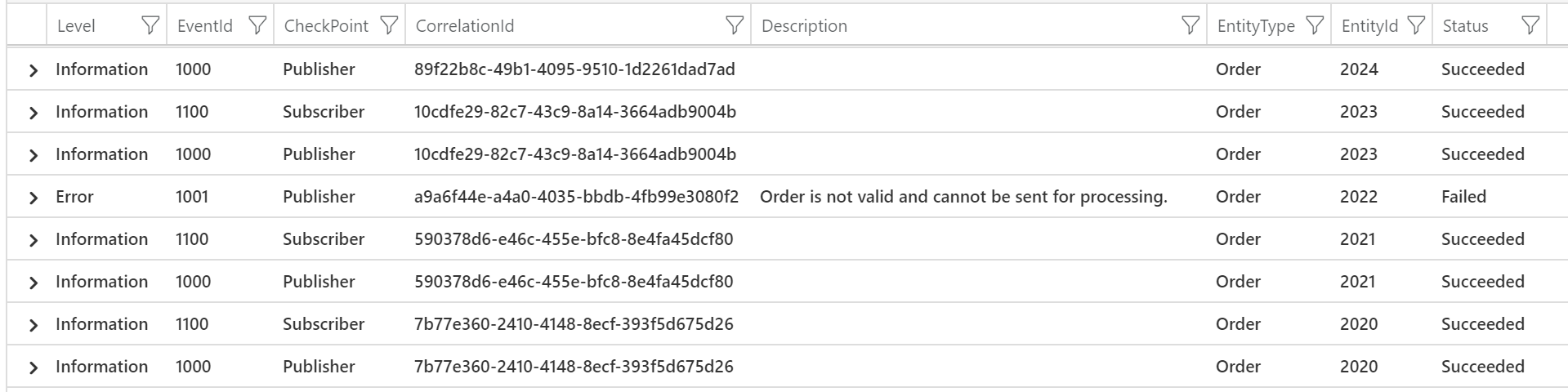

Get All Tracking Traces

This query returns all tracking events of our structured logging.

A sample of the result set is as follows

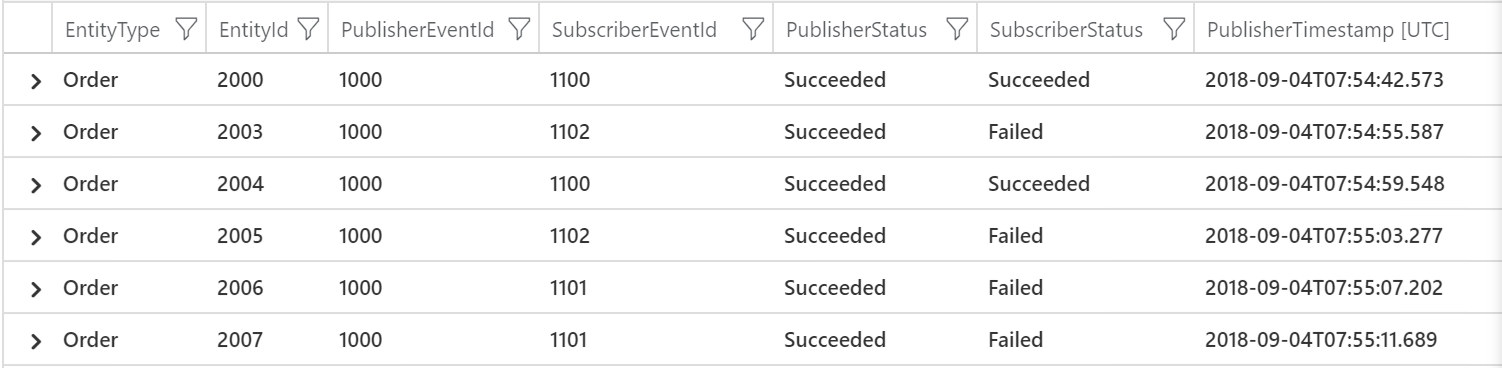

Correlated Traces

Returns correlated traces using the check points.

A sample of the result set is as follows

Correlated Traces by Entity Id

Gets the correlated traces for a particular Entity Id

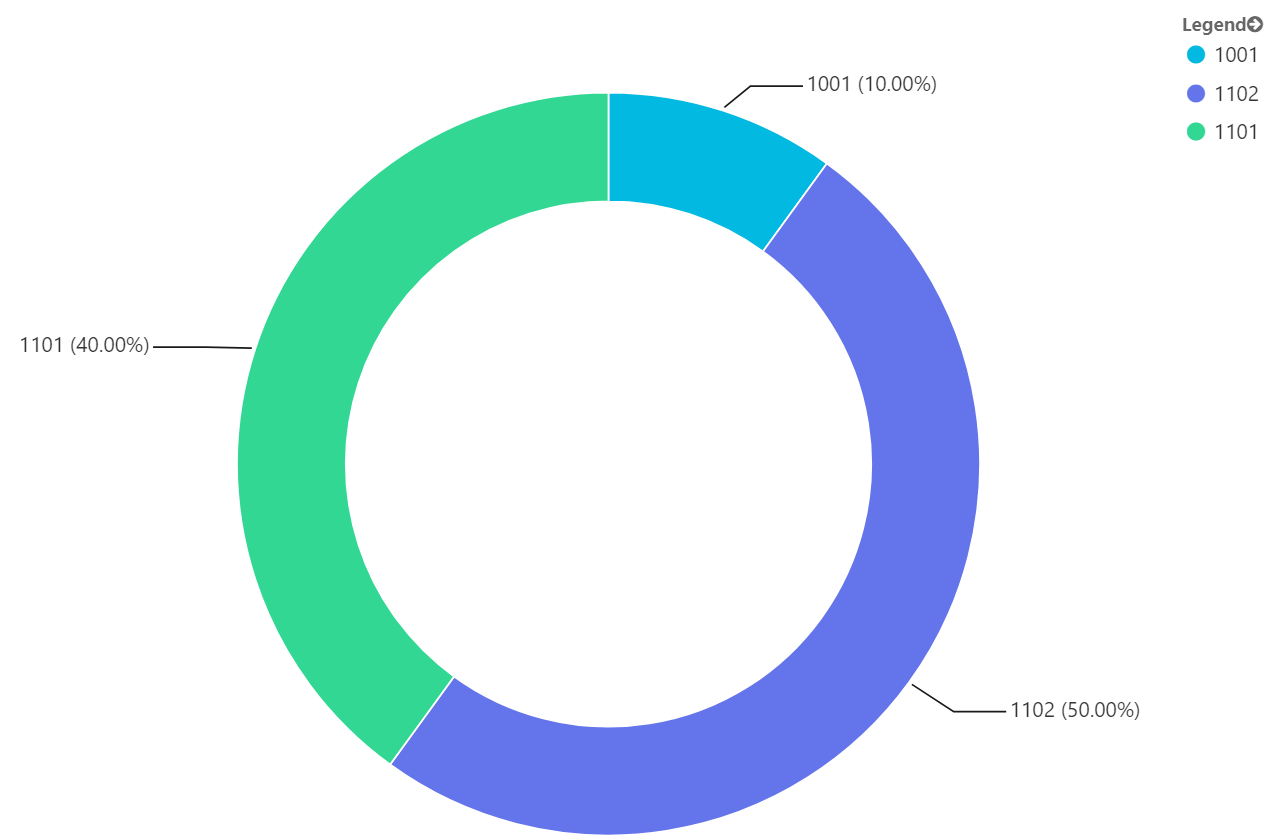

Occurrence of Errors

Get the occurrence of errors by Event Id

Publishing Application Insights Custom Queries and Charts

You can save and publish your Application Insights custom queries for yourself or to share so other users can easily access them. You can also include them in your custom Azure Dashboard. In a previous post I showed how to do it for Logic Apps, but you can so the same for your Azure Functions logging with Application Insights queries.

Wrapping Up

In this post, we saw how easy is to implement structured logging in Azure Functions using out-of-the-box capabilities with Application Insights. We also saw how to query and analyse our structure logging in Application Insights using its Query Language. I hope you have found this post handy. Please feel free to leave your feedback or questions below

Happy Logging!

Follow me on @pacodelacruz

Cross-posted on Deloitte Platform Engineering