Load Balancing Public and Private Traffic to Azure API Management across Multiple Regions

In different projects, I have had to implement load balancing for multi-region deployments of Azure API Management. Multi-region deployments of API Management allow you to enable a built-in external load balancer. This means that public traffic is routed to a regional gateway based on the lowest latency without the need for additional configuration or the help of any other service.

However, it is quite common that organisations want to use API Management for both internal and external consumers, exposing just a subset of the APIs to the public internet. Azure Application Gateway enables those scenarios. Additionally, it provides a Web Application Firewall to protect APIs from malicious attacks. When organisations integrate API Management with Application Gateway, the built-in external load balancer can no longer be used.

The simplest way to implement load balancing across multiple regions for public traffic to API Management exposed via Application Gateway is to use Azure Traffic Manager. However, what can you do when private traffic, for instance traffic coming from on-premises, must also be load-balanced across the multiple regions of API Management? According to this document:

For multi-region API Management deployments configured in internal virtual network mode, users are responsible for managing the load balancing across multiple regions, as they own the routing.

At the time of writing, Azure does not have a highly available service offering for load-balancing private HTTP traffic across regions with health probing capabilities. There is a feature request in the networking feedback forums that raises this gap. Thus, currently, there is no simple approach to implement this. Some months back, I raised an issue on this documentation page due to the lack of clarity on the topic.

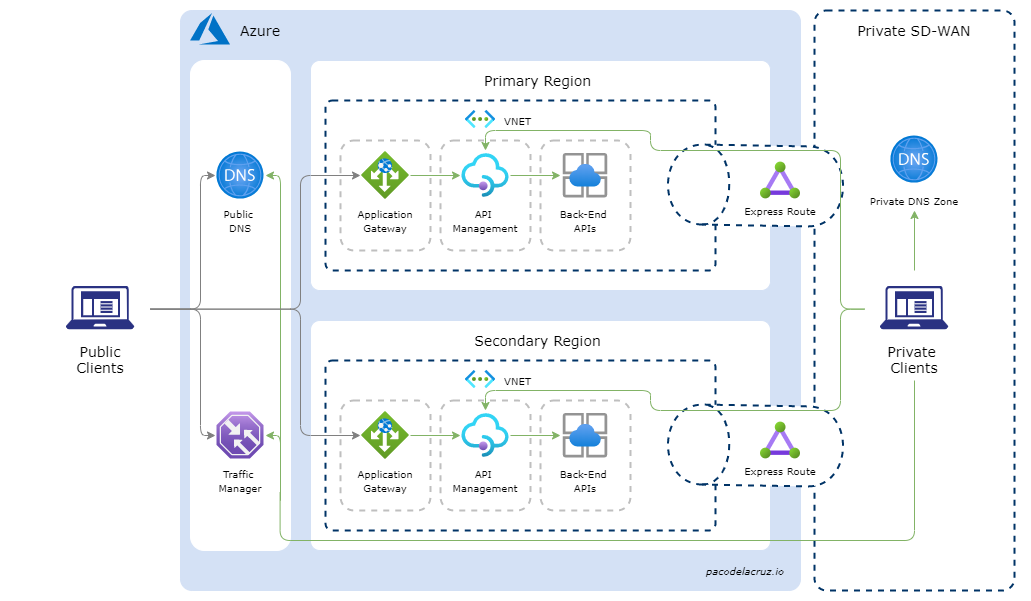

In this post, I will explain how we can implement highly available load balancing for both public and private HTTP traffic to Azure API Management when it is integrated with Application Gateway and deployed to multiple regions. The approach I show here relies on having public health-probe endpoints exposed via Application Gateway.

Discarded Approaches

Before I explain how this could be implemented, I would like to cover the approaches that were discarded and the rationale behind those decisions. Even though they are not feasible for this scenario, they are worth reviewing here because these alternatives could be raised as part of a technical decision process. This section could save you some time and help you avoid exploring dead-end alternatives.

There are some load balancing service offerings on Azure that target internal traffic. However, they could not be considered for the reasons outlined below.

- Azure Load Balancer – It operates at layer 4 (Transport Layer) of the OSI Network Stack and supports TCP and UDP protocols. However, it targets Virtual Machines and Scale Sets only. It cannot be used for PaaS services like API Management. Furthermore, it targets load balancing of regional traffic and is deployed within a single region, which means a single point of failure.

- Azure Application Gateway – It is a layer 7 (Application Layer) web traffic load balancer that can make routing decisions based on HTTP request attributes. Application Gateway supports any public and private IP address as back ends, including Azure API Management or Azure Web Apps. However, it is deployed within a single region. Thus, by itself, it is not resilient to a regional outage or disaster.

If you want to read more about these options and how they compare to Traffic Manager, refer to this article.

Another option that was discarded was using the Windows DNS service. While this service can be geo-distributed and supports Application Load-Balancing with round-robin or basic weighted routing, it does not have health probing capabilities, so traffic could potentially be directed to unavailable or unhealthy endpoints.

Microsoft has documented two approaches for using Azure Traffic Manager to fail over private endpoints on Azure, each with some limitations, as summarised below.

- Using Azure Traffic Manager for Private Endpoint Failover – Manual Method - This approach works well when only one region is meant to be active at a time (active-passive scenarios) and a manual failover is acceptable. However, it does not work well in active-active scenarios. If you are paying for a multi-instance deployment of API Management and/or receiving traffic from multiple regions, you most likely want to be able to leverage all the running instances.

- Using Azure Traffic Manager for Private Endpoint Failover – Automation - This approach could work for active-active scenarios. However, its main drawback is that it requires a custom monitoring solution. You would then need to make sure that this monitoring component is itself highly available and resilient to a regional outage or disaster.

Now, let us continue to discuss how to implement a highly available load balancing of public and private traffic to API Management across multiple regions.

Prerequisites

If you are planning to implement this in your organisation, there are some prerequisites that you need to bear in mind.

- Multi-region deployment of API Management. This is somewhat obvious, assuming this is the reason you are reading this article.

- Application Gateway in both regions. As I mentioned before, this approach relies on having health probe endpoints available via the public internet. Application Gateway is the one in charge of routing and exposing some of the private endpoints into the internet while keeping certain APIs only accessible via the private network.

- API Management with Application Gateway integration set up. You can find more information in this article.

- Traffic Manager to implement the load balancing and health probing.

- Write access to the private DNS Zone to create private DNS records.

- Write access to the public DNS registrar to create public DNS records.

Relevant Concepts

Before we get into the technical details of how this approach works, I recommend you make sure that you understand the concepts below.

- DNS – You don’t need to be aware of all the intricacies of how DNS resolution translates a hostname into an IP address, but you do need to understand the basic principles of a private DNS zone and a public DNS service and registrar.

- Split-horizon DNS – What you need to be aware of is that the same hostname can be configured to resolve to different IP addresses in the public DNS registrar and in the private DNS zone. This way, private traffic gets directed to a private endpoint, while public traffic gets directed to a public endpoint.

Scenario

In this hypothetical scenario, we want HTTP requests to the domain api.pacodelacruz.io to be directed to one of the available and healthy API Management endpoints. API Management is deployed in two regions and has an internal load balancer (ILB). Application Gateway is used to expose a subset of APIs to the public.

Custom Public Traffic Load Balancing

As mentioned above, a multi-region deployment of API Management Premium provides a built-in external load balancer for public HTTP traffic that routes requests to a regional gateway based on the lowest latency. However, you can implement custom routing and load balancing using Traffic Manager. Custom traffic routing is required when API Management is integrated with Application Gateway.

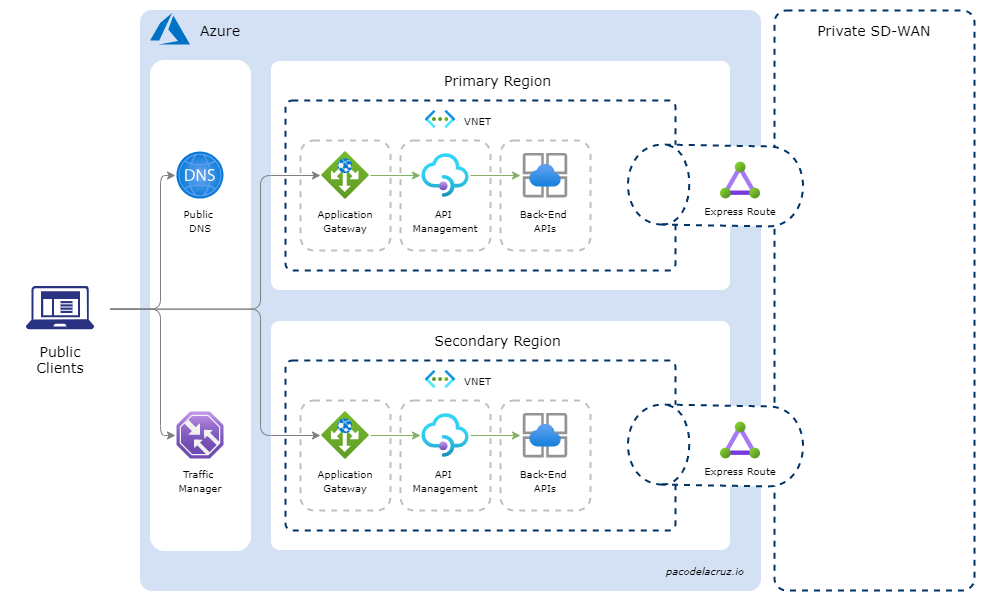

The conceptual architecture diagram below depicts the public traffic load-balancing scenario.

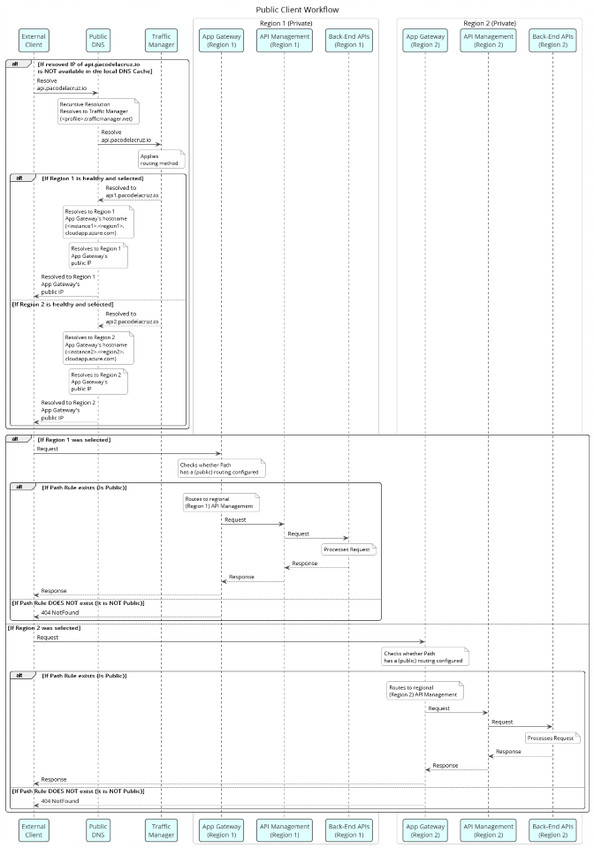

The flow of the public HTTP traffic would be as described in the sequence diagram shown below.

The public client’s flow can be summarised as follows:

- The public client is trying to reach the hostname, e.g. api.pacodelacruz.io.

- The client checks whether the hostname mapping to an IP address is already in its cache. Otherwise, it goes to the public DNS to resolve it.

- The public DNS record points to the Traffic Manager endpoint via a hostname.

- Traffic Manager then routes the traffic based on the routing method configured on the profile and the availability, performance, and health of the endpoints. It then returns a hostname, in this scenario either api1.pacodelacruz.io or api2.pacodelacruz.io.

- Then the public DNS, in turn, resolves the returned hostname to the regional Application Gateway’s hostname and then to its public IP address.

- The regional Application Gateway’s IP address is returned to the client and now the public client can make the request to the corresponding API Management instance through App Gateway.
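The resolution chain summarised above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the Traffic Manager profile hostname, the Application Gateway FQDNs, and the IP addresses are hypothetical placeholders, and `pick_endpoint` simply returns the first healthy endpoint as a stand-in for whichever routing method is configured on the real profile.

```python
# Hypothetical placeholders: the Traffic Manager hostname, the Application
# Gateway FQDNs, and the IP addresses are NOT taken from a real deployment.
TRAFFIC_MANAGER_HOST = "example-profile.trafficmanager.net"

PUBLIC_DNS = {
    "api.pacodelacruz.io": ("CNAME", TRAFFIC_MANAGER_HOST),
    "api1.pacodelacruz.io": ("CNAME", "appgw-primary.example.net"),
    "api2.pacodelacruz.io": ("CNAME", "appgw-secondary.example.net"),
    "appgw-primary.example.net": ("A", "203.0.113.10"),
    "appgw-secondary.example.net": ("A", "203.0.113.20"),
}

ENDPOINTS = ["api1.pacodelacruz.io", "api2.pacodelacruz.io"]


def pick_endpoint(healthy):
    """Stand-in for Traffic Manager: return the first healthy endpoint."""
    for endpoint in ENDPOINTS:
        if healthy.get(endpoint, False):
            return endpoint
    raise LookupError("no healthy endpoint available")


def resolve_public(hostname, healthy):
    """Follow the chain from a hostname to a public IP address.

    Returns the IP address and the list of hostnames visited on the way.
    """
    chain = [hostname]
    while True:
        if hostname == TRAFFIC_MANAGER_HOST:
            # Traffic Manager answers with one of the regional hostnames.
            hostname = pick_endpoint(healthy)
        else:
            record_type, value = PUBLIC_DNS[hostname]
            if record_type == "A":
                return value, chain
            hostname = value
        chain.append(hostname)
```

Calling `resolve_public("api.pacodelacruz.io", health)` with a dictionary that marks api1.pacodelacruz.io as unhealthy returns the secondary Application Gateway's address, which is the failover behaviour we are after.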

For the sequence described above to work, we need the configuration below:

Public DNS

| Hostname | Pointing to |
|---|---|
| api.pacodelacruz.io | Azure Traffic Manager’s hostname |
| api1.pacodelacruz.io | FQDN of the Application Gateway in the primary region |
| api2.pacodelacruz.io | FQDN of the Application Gateway in the secondary region |

Traffic Manager Profile

| Setting | Value |
|---|---|
| Endpoint types | External endpoint |
| Endpoint 1 | api1.pacodelacruz.io |
| Endpoint 2 | api2.pacodelacruz.io |
| Endpoint monitor settings / Custom Header settings | host:api.pacodelacruz.io |

You might be wondering why we need api1.pacodelacruz.io and api2.pacodelacruz.io hostnames when we can route traffic from Traffic Manager directly to the Application Gateway hostnames. This will become evident when we see the configuration required for private traffic.

Traffic Manager's endpoint monitor settings / custom header settings allow us to keep the original hostname (api.pacodelacruz.io) in the health probe requests while also using the existing TLS certificate without having to add unnecessary aliases.
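To make the effect of the custom header more concrete, here is a minimal sketch of what such a probe request looks like: it is addressed to a regional hostname but carries the original hostname in the `Host` header. The probe path `/health` is a hypothetical value, and treating only HTTP 200 as healthy is an assumption for this sketch. The request is only built, not sent.

```python
import urllib.request


def build_probe(endpoint_host, original_host, path):
    """Build (but do not send) a health probe request.

    The request targets the regional endpoint, while the Host header keeps
    the original hostname that clients use.
    """
    url = f"https://{endpoint_host}{path}"
    return urllib.request.Request(
        url,
        headers={"Host": original_host},
        method="GET",
    )


def is_healthy(status_code):
    """Assumption for this sketch: only HTTP 200 counts as healthy."""
    return status_code == 200


# "/health" is a hypothetical probe path used for illustration only.
probe = build_probe("api1.pacodelacruz.io", "api.pacodelacruz.io", "/health")
```

Here `probe.full_url` points at api1.pacodelacruz.io, while `probe.get_header("Host")` holds api.pacodelacruz.io, so the back end sees the same hostname it serves to regular clients.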

How to integrate App Gateway with an API Management instance deployed within a VNET is detailed in this article. App Gateway needs to use the API Management regional endpoints for the back-end pool and health probing endpoints as described here. API Management, in turn, will need to route API calls to regional backend services as defined here.

Private Traffic Load Balancing

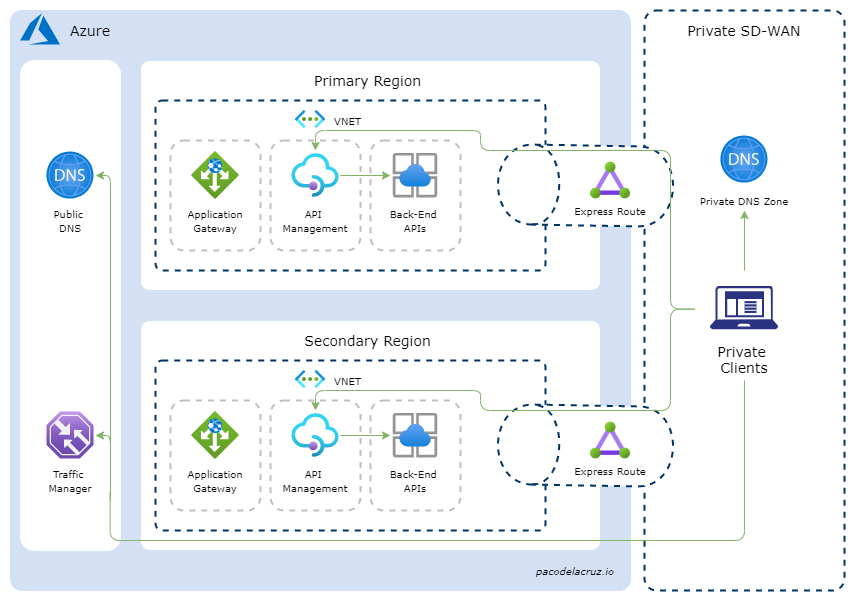

Once we have the custom load balancing of public traffic set up via Traffic Manager, we can proceed to configure the private traffic load balancing. A conceptual architecture diagram is depicted in the figure below.

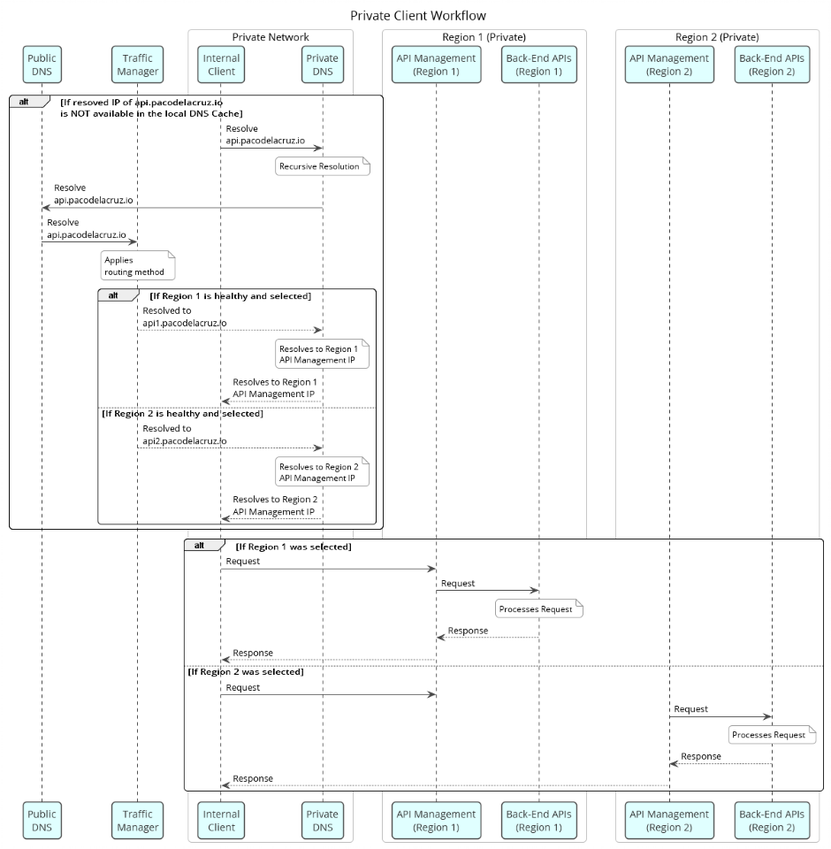

The flow of the private HTTP traffic is as depicted in the sequence diagram shown below.

The private client’s flow can be summarised as follows:

- The private client is trying to reach the hostname, e.g. api.pacodelacruz.io.

- The client checks whether the hostname mapping to an IP address is already in its cache. Otherwise, it goes to the private DNS to resolve it.

- The private DNS might have it in cache or can query the public DNS.

- The public DNS record points to the Traffic Manager endpoint via a hostname.

- Traffic Manager then routes the traffic based on the routing method configured on the profile and the availability, performance, and health of the endpoints. It then returns a hostname, in this scenario either api1.pacodelacruz.io or api2.pacodelacruz.io.

- Then the private DNS, in turn, resolves the returned hostname to the regional API Management IP address. This is where the DNS split-horizon is configured.

- Now the private client can make the request to the corresponding API Management instance directly via the private network.

For the sequence described above to work, we need the configuration below:

Private DNS

| Hostname | Pointing to |
|---|---|
| api1.pacodelacruz.io | Internal IP address of the primary regional API Management instance |
| api2.pacodelacruz.io | Internal IP address of the secondary regional API Management instance |

All the remaining setup was done when configuring the custom public traffic load balancing as described above.
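The split-horizon behaviour in this section can be illustrated with a small sketch. It assumes a private zone that only overrides the two regional hostnames and falls back to public DNS for everything else. All IP addresses and the non-pacodelacruz.io hostnames are hypothetical placeholders.

```python
# Hypothetical values: none of these targets come from a real deployment.
PUBLIC_ZONE = {
    "api.pacodelacruz.io": "example-profile.trafficmanager.net",
    "api1.pacodelacruz.io": "appgw-primary.example.net",
    "api2.pacodelacruz.io": "appgw-secondary.example.net",
}

# The private zone only overrides the regional hostnames.
PRIVATE_ZONE = {
    "api1.pacodelacruz.io": "10.1.0.5",  # primary API Management internal IP
    "api2.pacodelacruz.io": "10.2.0.5",  # secondary API Management internal IP
}


def lookup(hostname, client_is_private):
    """Return the record a client would see for a hostname.

    Private clients get the private-zone answer when one exists and fall
    back to the public answer otherwise. Public clients only ever see the
    public zone.
    """
    if client_is_private and hostname in PRIVATE_ZONE:
        return PRIVATE_ZONE[hostname]
    return PUBLIC_ZONE[hostname]
```

Both kinds of client get the same answer for api.pacodelacruz.io, so Traffic Manager's health-based decision applies to both. Only the final hop differs: a private client resolves api1.pacodelacruz.io to the internal API Management address, while a public client is sent to the Application Gateway.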

Considerations

As mentioned earlier, this approach for load-balancing private traffic only works if you have public health probe endpoints. When this is not the case, i.e. you have a multi-region deployment of Azure API Management with ILB only and this is not exposed to the public internet via Application Gateway, a different approach or workaround would be required.

One simple option is that you implement two sets of Azure Functions to expose the health status of internal endpoints to the public internet.

- Internal probing Azure Functions - Timer triggered Azure Functions deployed within the VNET, one per region, whose purpose would be to check the health of the private APIs. These functions can then store the health status of each of the regional endpoints in a storage account.

- External health status endpoints – HTTP triggered Azure Functions deployed outside the VNET, one per region. These functions would read the health status of each internal endpoint from the storage account and return a corresponding HTTP status code, for example HTTP 200 for a healthy endpoint and HTTP 500 for an unhealthy endpoint.

This way, the Traffic Manager could rely on the publicly exposed health probe endpoints without exposing internal APIs.
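The core logic of the two sets of functions could look roughly like the sketch below. It deliberately leaves out the Azure Functions bindings and the storage account client: a plain dictionary stands in for the storage account, and the function names, the `check` callable, and the region keys are all hypothetical.

```python
def record_health(store, region, check):
    """Internal probing logic (would run on a timer inside the VNET).

    `check` is a callable that returns True when the private API is healthy.
    Any exception raised by the check is treated as unhealthy.
    """
    try:
        healthy = bool(check())
    except Exception:
        healthy = False
    store[region] = healthy
    return healthy


def health_status_code(store, region):
    """External endpoint logic (would run behind an HTTP trigger).

    Returns 200 for a healthy region and 500 otherwise. A region with no
    recorded status is reported as unhealthy.
    """
    return 200 if store.get(region, False) else 500


def failing_check():
    """Simulates a probe that cannot reach the private API."""
    raise TimeoutError("private API did not respond")


# A dictionary stands in for the storage account in this sketch.
status_store = {}
record_health(status_store, "primary", lambda: True)
record_health(status_store, "secondary", failing_check)
```

Reporting a missing status as unhealthy is a design choice in this sketch: if the internal probe stops running, Traffic Manager stops sending traffic to that region rather than assuming it is fine.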

Wrapping Up

Throughout this post, I have described how you can implement load balancing of public and private traffic to an API Management instance deployed to multiple regions and exposed via Application Gateway. As you have seen, once you have API Management integrated with Application Gateway, implementing load balancing for both public and private traffic only requires adding a Traffic Manager profile and certain records on both the public DNS registrar and the private DNS zone.

If you want to be able to load-balance private traffic only without exposing health probe endpoints to the public internet, consider voting for this feature request, so that this is provided as a built-in networking feature on Azure.

I hope you have found this post useful!

Happy load balancing!

Cross-posted on Deloitte Engineering

Follow me on @pacodelacruz